HTCondor Withstands CERN’s Massive Stress Test

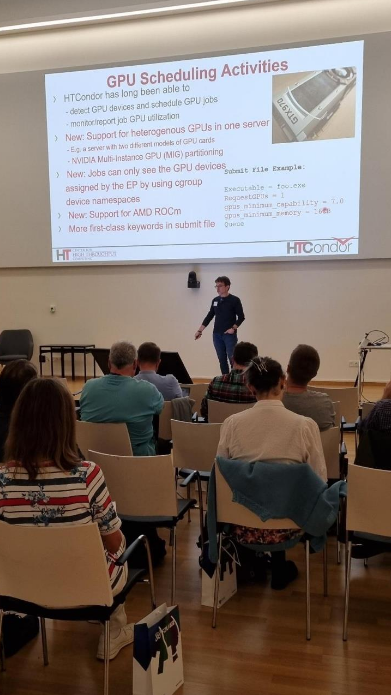

2025 HTCondor European Workshop Showcases Advances in High Throughput Computing

HTCondor European Workshop 2025

HTCondor Celebrates 40 Years Powered by Community

HTC Strikes Again

OSPool Is a Game Changer: HTC25 Keynote Presenter Erik Wright

PATh Becomes NAIRR Pilot Service Provider for AI Workloads

Reaching Record Numbers of Contributing Institutions to the OSPool

HTC News Shorts: February 2025

HTC News Shorts: January 2025

Fostering Community Amongst Developers and Researchers: A Reflection on the 2024 European Autumn HTCondor Workshop

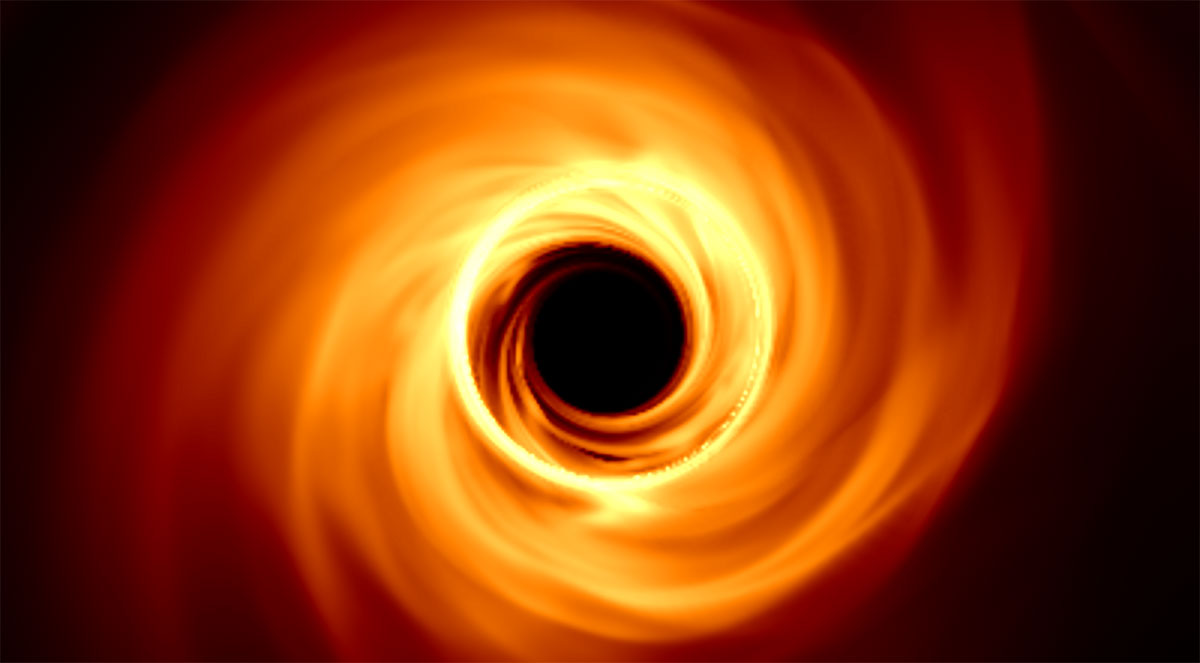

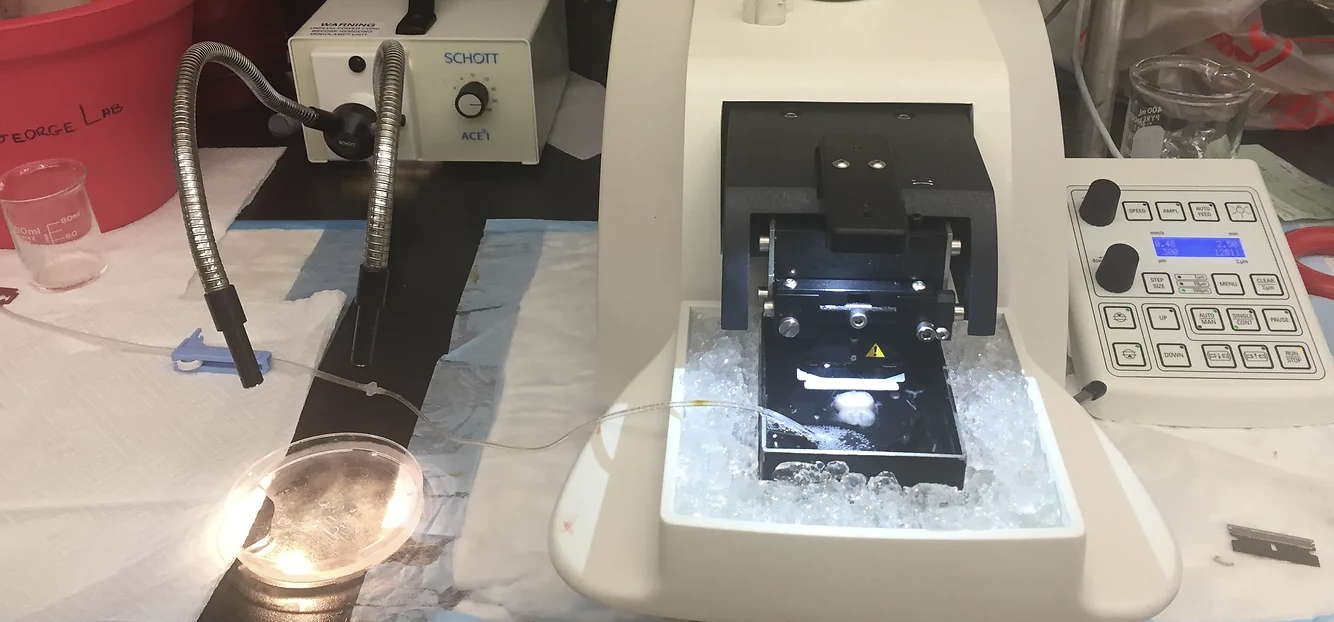

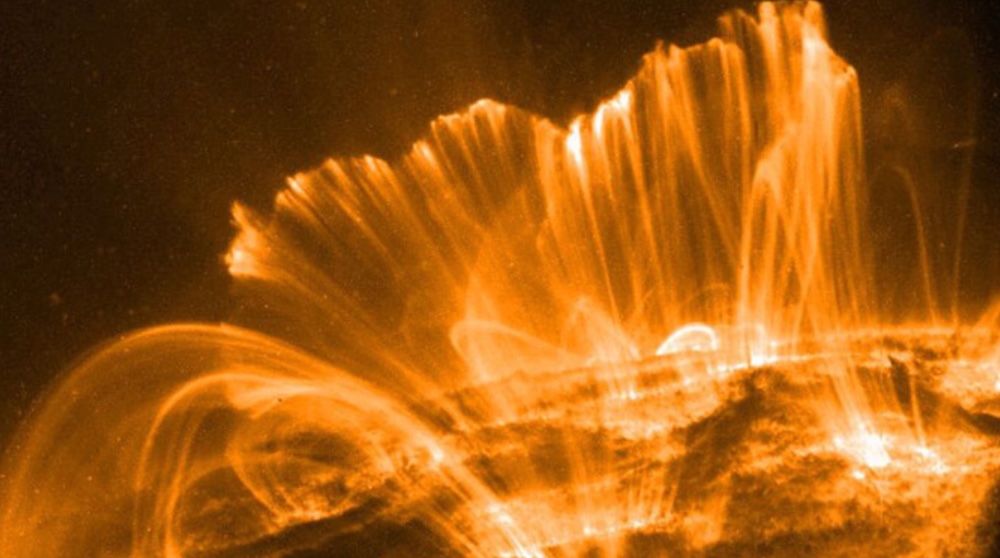

Decoding the Sun’s Secrets With the Help of Pelican

Student Fellows Advance Research Computing Infrastructure at CHTC

A Spotlight on Cole Bollig: Finding CHTC, Community, and Learning HTCondor

European HTCondor Workshop: Abstract Submission Open

Share your experiences with HTCSS at the European HTCondor Workshop in Amsterdam!

High Throughput Community Builds Stronger Ties at HTC24 Week

CHTC Launches First Fellow Program

Registration is open for the European HTCondor Workshop, September 24-27

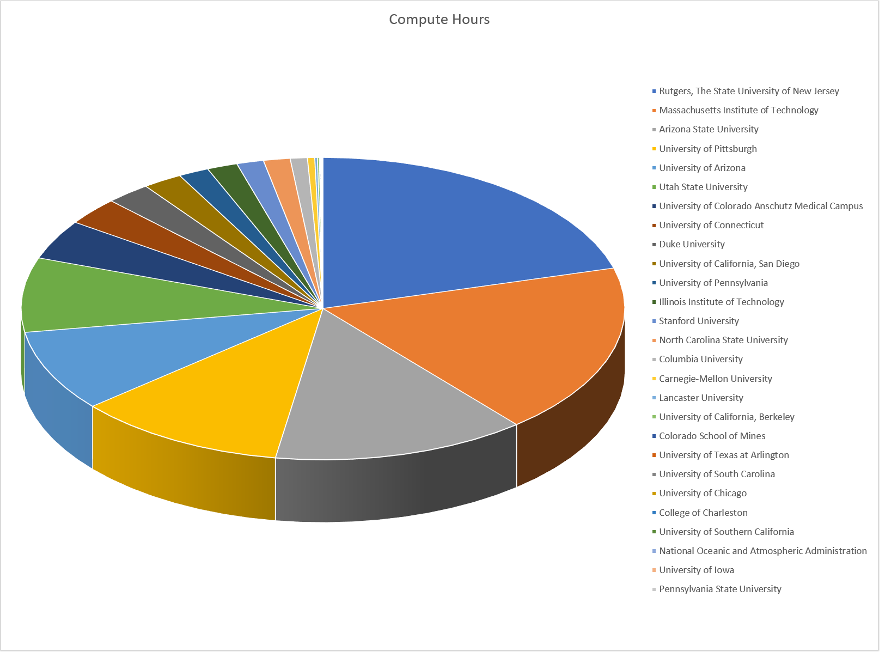

Advancing computational throughput of NSF funded projects with the PATh Facility

Since 2022, the Partnership to Advance Throughput Computing (PATh) Facility has provided dedicated high throughput computing (HTC) capacity to researchers nationwide. Following a year of expansion, here’s a look into the researchers’ work and how it has been enabled by the PATh Facility.

Save The Date for the European HTCondor Workshop, September 24-27

Join Us at Throughput Computing 2024, July 8 - 12

Don’t miss this opportunity to connect with the High Throughput Computing community.

Save the Dates for Throughput Computing 2024

Don’t miss this opportunity to connect with colleagues and learn more about HTC.

Tribal College and CHTC pursue opportunities to expand computing education and infrastructure

The American Museum of Natural History Ramps Up Education on Research Computing

The Pelican Project: Building a universal plug for scientific data-sharing

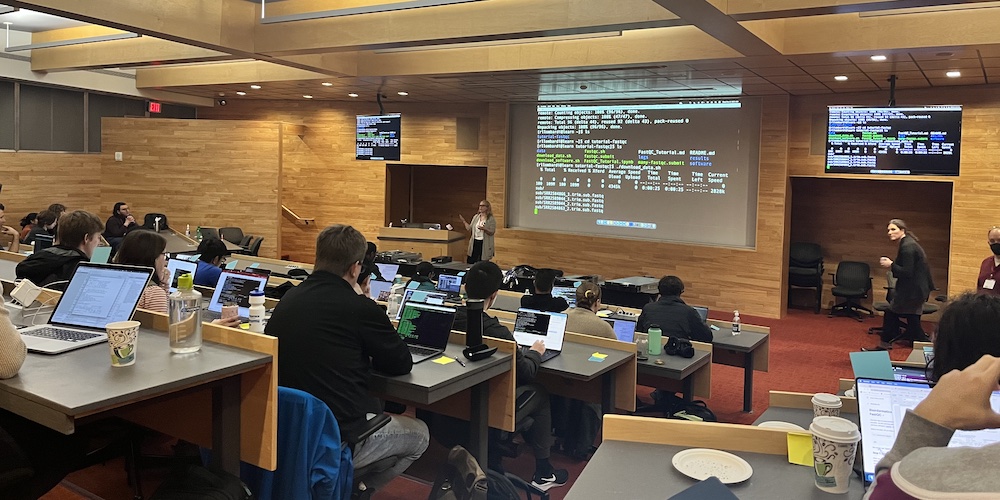

CHTC Launches First Introductory Workshop on HTC and HPC

HTCondor European Workshop returns for ninth year in Orsay, France

Throughput Computing 2023 Concludes

Thank you to all in-person and virtual participants in Throughput Computing 2023. Over the course of the event we had 79 talks spanning tutorials, applications and science domains using HTCSS and OSG Services. In addition to the talks, and in spite of the rain, we enjoyed many opportunities to socialize and continue to strengthen the HTC community.

Throughput Computing 2023 Begins - Join Online

Online registration is open for Throughput Computing 2023. Please join us virtually by registering at the link below:

Registration Extended for Throughput Computing 2023

Registration for Throughput Computing 2023 has been extended to July 20th!

2023 European HTCondor Workshop

Construction Commences on CHTC's Future Home in New CDIS Building

The CHTC Philosophy of High Throughput Computing – A Talk by Greg Thain

Get To Know Student Communications Specialist Hannah Cheren

Registration Opens for Throughput Computing 2023

Registration for Throughput Computing 2023 is now open!

Save the dates for Throughput Computing 2023 - a joint HTCondor/OSG event

Don't miss these in-person learning opportunities in beautiful Madison, Wisconsin!

Get To Know Todd Tannenbaum

CHTC Leads High Throughput Computing Demonstrations

OSG User School 2022 Researchers Present Inspirational Lightning Talks

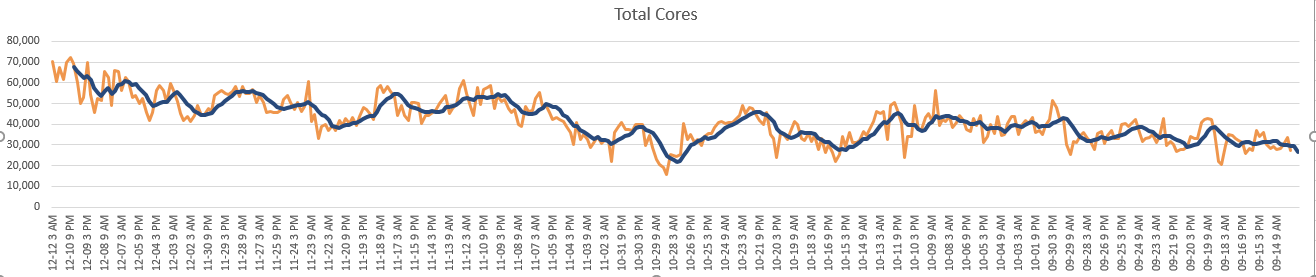

OSPool's Growing Number of Cores Reaching New Levels

Campuses contributing capacity to the OSPool led to a record-breaking number of cores in December 2022. On December 9th, the OSPool, which provides computing resources to researchers across the country, crossed the 70,000-core line for the very first time.

CHTC Facilitation Innovations for Research Computing

High-throughput computing: Fostering data science without limits

UW–Madison's IceCube Neutrino Observatory Wins HPCwire Award

Over 240,000 CHTC Jobs Hit Record Daily Capacity Consumption

Center for High Throughput Computing (CHTC) users continue to be hard at work smashing records with high throughput computational workloads. On October 20th, more than 240,000 jobs completed, reporting a total consumption of more than 710,000 core hours. This is equivalent to the capacity of roughly 30,000 cores running non-stop for 24 hours.
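As a rough check of that equivalence, dividing core hours by the hours in a day gives the number of cores that would have to run non-stop to deliver them. The sketch below is illustrative only: the function name `equivalent_cores` is hypothetical, and 710,000 is the lower bound quoted in the item, so the result lands slightly under the rounded 30,000 figure.

```python
HOURS_PER_DAY = 24


def equivalent_cores(core_hours: float, hours: float = HOURS_PER_DAY) -> float:
    """Cores that must run non-stop for `hours` to deliver `core_hours`."""
    return core_hours / hours


# Figure quoted in the news item ("more than 710,000 core hours").
reported_core_hours = 710_000

cores = equivalent_cores(reported_core_hours)
print(f"{cores:,.0f} cores running for {HOURS_PER_DAY} hours")
```

Conversely, 30,000 cores running for a full day would deliver 720,000 core hours, which is consistent with the "more than 710,000" reported.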

PATh Extends Access to Diverse Set of High Throughput Computing Research Programs

Solving for the future: Investment, new coalition levels up research computing infrastructure at UW–Madison

Registration now open for HTCondor workshop in Europe

We are pleased to announce the European HTCondor Workshop 2022. The workshop will take place as an ‘in-person event’ from Tuesday 11 October 09:00 h to Friday 14 October at lunchtime.

Retirements and New Beginnings: The Transition to Tokens

Plan to replace Grid Community Toolkit functionality in the HTCondor Software Suite

The HTCondor Software Suite (HTCSS) is working to retire the use of the Grid Community Toolkit libraries (or GCT, which is a fork of the former Globus Toolkit). The functionality provided by the GCT is rapidly being replaced by the use of IDTOKENS, SciTokens, and plain SSL within the ecosystem. Currently, HTCSS uses these libraries for:

HTCondor European Workshop Dates Released

We are very pleased to announce that the 2022 European HTCondor Workshop will be held from Tuesday 11th October to Friday 14th October. Save the dates!

Introducing the PATh Facility: A Unique Distributed High Throughput Computing Service

HTCondor Week 2022 Concludes

Thank you to all in-person and virtual participants in HTCondor Week 2022. Over the course of the event we had over 40 talks spanning tutorials, applications and science domains using HTCSS.

All slides can be found on the event website, and we will be publishing all videos on our YouTube channel under "HTCondor Week 2022".

We hope to see you next year!

Speaker line-up for HTCondor Week 2022 announced

Registration for HTCondor Week 2022 now open!

New HTCondor Version Scheme

Starting with HTCondor 9.2.0, HTCondor will adopt a new version scheme to facilitate quicker patch releases. The version number will still retain the MAJOR.MINOR.PATCH form with slightly different meanings.

European HTCondor Workshop 2021 Next Week

The European HTCondor Workshop 2021 will be held next week, Monday Sept 20 through Friday Sept 24 as a purely online event via videoconference. It is still not too late to register - so if interested, please hurry up! Some slots for contributed presentations are still available. Please let us know about your projects, experience, plans and issues with HTCondor. It’s a way to give back to the community in return for what you received from it.

European HTCondor Workshop 2021 Registration Open

The European HTCondor Workshop 2021 will be held Monday Sept 20 through Friday Sept 24 as a purely online event via videoconference. The workshop is an opportunity for novice and experienced users of HTCondor to learn, get help and exchange ideas with each other and with the HTCondor developers and experts. This event is organized by the European community (and optimized for European timezones) but everyone worldwide is welcome to attend and participate.

Plan to replace Grid Community Toolkit functionality

We are working to retire the use of the Grid Community Toolkit libraries (or GCT, which is a fork of the former Globus Toolkit) in the HTCondor Software Suite. Functionality provided by the GCT, such as GSI authentication, is rapidly being replaced by the use of IDTOKENS, SciTokens, and plain SSL within the ecosystem. Especially if your organization relies on GSI, please see this article on the htcondor-wiki that details our timeline and milestones for replacing GCT in HTCondor and the HTCondor-CE.

HTCondor Week 2021 Preliminary Schedule, Registration Reminder

HTCondor Week 2021 is just over three weeks away! We are pleased to announce a preliminary schedule: https://agenda.hep.wisc.edu/event/1579/timetable/#20210524.detailed This will likely have some adjustments before the actual event, but it should still give people an overall sense of what to expect. Also, a reminder that registration is free but mandatory. Only registered users will be sent the meeting URL and password. You can sign up from our website: https://agenda.hep.wisc.edu/event/1579/registrations/239/

HTCondor 8.9.12 no longer available

On March 26 2021 at 3:30pm the HTCondor v8.9.12 release was pulled down from our website after significant backwards-compatibility problems were discovered. We plan to fix these problems in v8.9.13 to be released by March 30. We apologize for any inconvenience.

Come find us on Twitter!

After a long social media absence, we’ve resuscitated our Twitter account where we’ve been posting about HTCondor news, events, philosophy and other distributed systems related banter. Come find us and chime in! https://twitter.com/HTCondor

Campus Workshop on distributed high-throughput computing (dHTC) February 8-9, 2021

Save the date and register now for another Campus Workshop on distributed high-throughput computing (dHTC), February 8-9, 2021, offered by the Partnership to Advance Throughput Computing (PATh). All campus cyberinfrastructure (CI) staff are invited to attend!

Feb 8: Training on Using and Facilitating the Use of dHTC and the Open Science Grid (OSG), 2-5pm ET. Seats limited; register ASAP!

Feb 9: dHTC Virtual Office Hours with breakout rooms for discussion on all things dHTC, including OSG services for campuses and CC* awards, 2-5pm ET. Unlimited seats.

While there is no fee for either day, registration is required for participants to receive virtual meeting room details and instructions for training accounts via email. Please feel free to send any questions about the event to events@osg-htc.org.

National Science Foundation establishes a partnership to advance throughput computing

Recognizing the University of Wisconsin-Madison’s leadership role in research computing, the National Science Foundation announced this month that the Madison campus will be home to a five-year, 22.5 million dollar initiative to advance high-throughput computing. The Partnership to Advance Throughput Computing (PATh) is driven by the growing need for throughput computing across the entire spectrum of research institutions and disciplines. The partnership will advance computing technologies and extend adoption of these technologies by researchers and educators. Read the full article: https://morgridge.org/story/national-science-foundation-establishes-a-partnership-to-advance-throughput-computing/

A Community of Computers

Through the breakthrough concept of distributed computing, Miron Livny pioneered a way to harness massive amounts of computing and transformed the process of scientific discovery. Read the full article: https://ls.wisc.edu/news/a-community-of-computers

Miron Livny wins IEEE 2020 Outstanding Technical Achievement Award

The IEEE Computer Society Technical Committee on Distributed Processing (TCDP) has named Professor Miron Livny from University of Wisconsin as the recipient of the 2020 Outstanding Technical Achievement Award. See https://tc.computer.org/tcdp/awardrecipients/.

2020 IEEE TCDP ICDCS High Impact Paper Award

The initial HTCondor research paper from 1988, “Condor – A Hunter of Idle Workstations”, has won the 2020 IEEE TCDP ICDCS High Impact Paper Award. “To celebrate the 40 years anniversary of the International Conference on Distributed Systems (ICDCS), the IEEE Technical Committee on Distributed Processing (TCDP) Award Committee has selected ICDCS High Impact Papers that have profoundly influenced the field of distributed computing systems.” See https://tc.computer.org/tcdp/awardrecipients/

HTCondor-CE Virtual Office Hours (21 July 8-10am CDT)

On July 21, the CHTC will be hosting virtual office hours for the HTCondor-CE from 8-10am CDT. The HTCondor-CE software is a Compute Entrypoint (CE) based on HTCondor for sites that are part of a larger computing grid (http://htcondor-ce.org). During these office hours, we will be hosting a virtual room for general discussion with additional breakout rooms where you can meet with CHTC team members to discuss specific topics and questions. No appointment needed, just drop in! Office hour coordinates will be made available on the day of the event on this page: https://research.cs.wisc.edu/htcondor/office-hours/2020-07-21-HTCondorCE

Re-release of 8.9.5 deb Packages for Debian and Ubuntu

The HTCondor team is re-releasing the HTCondor 8.9.5 deb packages for Debian and Ubuntu. An error caused the Python bindings to be placed in the wrong directory, rendering them nonfunctional.

Univ of Manchester dynamically grows HTCondor into Amazon cloud

A recently posted article at Amazon explains how the Univ of Manchester dynamically grows the size of their HTCondor pool with resources from AWS by using the HTCondor Annex tool.

One HTCondor pool delivers over an ExaFLOP hour cost-effectively for IceCube

The article “A cost effective ExaFLOP hour in the Clouds for IceCube” discusses how an existing HTCondor pool was augmented with resources dynamically acquired from AWS, Azure, Google, Open Science Grid, and on-prem resources to perform science for the IceCube project. The objective, on top of (obviously) the science output, was to demonstrate how much compute someone can integrate during a regular working day, using only the two most cost effective SKUs for each Cloud provider.

HTCondor Week 2020 Registration Open

We invite HTCondor users, administrators, and developers to HTCondor Week 2020, our annual HTCondor user conference, May 19-20, 2020. This will be a free, online event.

Using 50k GPUs in one HTCondor cloud-based pool

Read about how, at SC19, UCSD got over 50k GPUs from the three largest Cloud providers and integrated them into a single HTCondor pool to run production science jobs. At peak, this cloud-based dynamic HTCondor cluster provided more than 90% of the performance of the #1 TOP500 HPC supercomputer.

HTCondor CREAM-CE Support

The CREAM working group has recently announced that official support for the CREAM-CE will cease in December 2020. We are soliciting feedback on the HTCondor and Open Science Grid (OSG) transition plan. Please see this post to the htcondor-users email list for more information, and please email any concerns to htcondor-admin@cs.wisc.edu and/or help@opensciencegrid.org.

Register soon for the 2018 European HTCondor workshop!

The 2018 European HTCondor Workshop will be held Tuesday Sept 4 through Friday Sept 7, 2018, at the Rutherford Appleton Laboratory in Oxfordshire, UK. This is a chance to find out more about HTCondor from the developers, and also to provide feedback and learn from other HTCondor users. Participation is open to all organizations and persons interested in HTCondor.

The registration deadline is Tuesday 21 Aug – register here (this web site also has much more information about the conference). You will receive a discount if you register by July 31.

If you’re planning to attend, please consider speaking – we’d like to hear about your project and how you are using HTCondor. Abstracts are accepted via the conference page linked above; follow the “Call for Abstracts” link and select “Submit new abstract”.

HTCondor powers Marshfield Clinic project on disease genetics

This article describes how HTCondor delivered more than 9 million CPU hours to help understand the genetics of human disease.

HTCondor Week 2018 Registration Open

We invite HTCondor users, administrators, and developers to HTCondor Week 2018, our annual HTCondor user conference, in beautiful Madison, Wisconsin, May 21-24, 2018. HTCondor Week features tutorials and talks from HTCondor developers, administrators, and users. It also provides an opportunity for one-on-one or small group collaborations throughout the week. In addition, the HEPiX Spring 2018 Workshop will also take place in Madison the preceding week.

NCSA calls on HTCondor partnership to process data for the Dark Energy Survey

HPCwire recently published an article on how HTCondor enables NCSA to take raw data from the Dark Energy camera telescope and process and disseminate the results within hours of observations occurring.

HTCondor role in Nobel-winning gravitational wave discovery

The Laser Interferometer Gravitational-Wave Observatory (LIGO) unlocked the final door to Albert Einstein’s Theory of Relativity, winning the Nobel Prize this week and proving that gravitational waves produce ripples through space and time. Since 2004, HTCondor has been a core part of the data analysis effort. See this recent article, as well as this one, for more details.

Intel and Broad Institute identify HTCondor for Genomics Research

Researchers and software engineers at the Intel-Broad Center for Genomic Data Engineering build, optimize, and widely share new tools and infrastructure that will help scientists integrate and process genomic data. The project is optimizing best practices in hardware and software for genome analytics to make it possible to combine and use research data sets that reside on private, public, and hybrid clouds. The center has recently identified HTCondor on its web site as an open source framework well suited for genomics analytics.

Updated EL7 RPMs for HTCondor 8.4.12, 8.6.5, and 8.7.2

The HTCondor team has released updated RPMs for HTCondor versions 8.4.12, 8.6.5, and 8.7.2 running on Enterprise Linux 7. In the recent Red Hat 7.4 release, the updated SELinux targeted policy package prevented HTCondor’s SELinux policy module from loading. Red Hat Enterprise Linux 7.4 systems running with SELinux enabled will require this update for HTCondor to function properly.

Register soon for the European HTCondor workshop!

The 2017 European HTCondor workshop will be held at DESY in Hamburg from Tuesday 6 June through Friday 9 June. This is a chance to find out more about HTCondor from the developers, and also to provide feedback and learn from other HTCondor users. Participation is open to all organizations and persons interested in HTCondor.

The registration deadline is Tuesday 30 May – register here (this web site also has much more information about the conference).

If you’re planning to attend, please consider speaking – we’d like to hear about your project and how you are using HTCondor. Abstracts will be accepted through Friday 26 May via the conference page linked above; follow the “Call for Abstracts” link and select “Submit new abstract”.

Updated HTCondor Week schedule available; reception Wednesday

An updated version of the full HTCondor Week schedule is now available. You can access the schedules for each day from the HTCondor Week page. Hopefully this will be quite close to the final schedule.

Note that there will be a reception sponsored by Cycle Computing on Wednesday from 6-7pm. You can see the details at the Wednesday schedule page.

Please remember that the registration deadline for HTCondor Week is Tuesday, April 25 (register here).

Preliminary HTCondor Week schedule available

A preliminary version of the full HTCondor Week schedule is now available. You can access the schedules for each day from the HTCondor Week page. Please remember that the registration deadline for HTCondor Week is Tuesday, April 25 (register here).

HTCondor Week registration deadline extended

The registration deadline for HTCondor Week has been extended until Tuesday, April 25. You can register here. Also, we anticipate posting a detailed schedule within the next day or two.

Register soon for HTCondor Week -- hotel block expires this week!

Monday, April 17 is the last day to register for HTCondor Week. If you’re planning to attend but haven’t yet registered, please do so as soon as possible at the registration page. A few other notes:

- We’ve added a preliminary list of talks and tutorials to the overview page and started on a preliminary schedule for Tuesday.

- The hotel room block at the DoubleTree expires this Friday, April 7 (see the local arrangements page for details). Note that the room block at the Fluno Center has now expired.

- We are still looking for more speakers. If you are interested in presenting, please email us at htcondor-week@cs.wisc.edu (see the speaker information page).

Register for upcoming HTCondor meetings!

Registration is now open for both HTCondor Week 2017 (held in Madison, Wisconsin, USA) and the 2017 European HTCondor workshop (held in Hamburg, Germany). The registration deadline for HTCondor Week is April 17; the registration deadline for the European HTCondor workshop is May 30. If you’re planning to attend, please register as soon as possible.

HTCondor Week 2017 registration open

Registration is now open for HTCondor Week 2017. The registration deadline is April 17, 2017.

HTCondor Week 2017 web page available

The HTCondor Week 2017 web page is now available. This web page includes information about nearby hotel options (note that HTCondor Week 2017 will be held at a different location than the last few HTCondor Weeks, so that may affect your hotel choice). Registration should be open by the end of February; at this time we anticipate a registration fee of $85/day.

HTCondor used by Google and Fermilab for a 160k-core cluster

At SC16, the HTCondor Team, Google, and Fermilab demonstrated a 160k-core cloud-based elastic compute cluster. This cluster uses resources from the Google Cloud Platform provisioned and managed by HTCondor as part of Fermilab’s HEPCloud facility. The following article gives more information on this compute cluster, and discusses how the bursty nature of computational demands is making the use of cloud resources increasingly important for scientific computing. Find out more information about the Google Cloud Platform here.

European HTCondor workshop scheduled for June 6-9, 2017

The 2017 European HTCondor workshop will be held Tuesday, June 6 through Friday, June 9, 2017 at DESY in Hamburg, Germany. We will provide more details as they become available.

HTCondor helps with GIS analysis

This article explains how the Clemson Center for Geospatial Technologies (CCGT) was able to use HTCondor to help a student analyze large amounts of GIS (Geographic Information System) data. The article contains a good explanation of how the data was divided up in such a way as to allow it to be processed using an HTCondor pool. Using HTCondor allowed the data to be analyzed in approximately 3 hours, as opposed to the 4.11 days it would have taken on a single computer.
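To put the two runtimes quoted above on the same scale, the short sketch below converts the single-computer estimate to hours and computes the resulting speedup. The helper name `speedup` is made up for illustration, and the 3-hour figure is only the approximate value given in the item, so the ratio is approximate as well.

```python
HOURS_PER_DAY = 24


def speedup(serial_hours: float, parallel_hours: float) -> float:
    """Ratio of single-computer runtime to runtime on the HTCondor pool."""
    return serial_hours / parallel_hours


# Figures quoted in the news item.
serial_hours = 4.11 * HOURS_PER_DAY  # 4.11 days on a single computer
pool_hours = 3.0                     # "approximately 3 hours" on the pool

ratio = speedup(serial_hours, pool_hours)
print(f"Single computer: {serial_hours:.2f} h, pool: {pool_hours:.0f} h")
print(f"Speedup: about {ratio:.0f}x")
```

With these inputs the single-computer run is just under 99 hours, so the pool finished the analysis roughly 33 times faster.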

HTCondor Week 2017 scheduled for May 2-5

HTCondor Week 2017 will be held Tuesday, May 2 through Friday, May 5, 2017 at the Fluno Center on the University of Wisconsin-Madison campus. We will provide more details as they become available.

HTCondor Week tutorials free to UW-Madison faculty, staff and students

Just a reminder that the Tuesday tutorials at HTCondor Week are free to UW-Madison faculty, staff and students. If you have any interest in using HTCondor and Center for High Throughput Computing resources, we’d love to see you on Tuesday.

However, as everyone knows, there ain’t no such thing as a free lunch – people who attend the tutorials without paying are not eligible for the lunch (you can still get snacks at the breaks, though).

Why do supercomputers have to be so big?

In this Blue Sky Science article Lauren Michael of the Center for High Throughput Computing answers a question from an eight-year-old: Why do supercomputers have to be so big? The article can also be viewed at the Wisconsin State Journal and the Baraboo News Republic.

HTCondor Week registration deadline is May 9

The registration deadline for HTCondor Week 2016 has been extended to Monday, May 9. This will be the final extension – we need to finalize attendance numbers for the caterers.

Also note that Wednesday, April 27, is the last day to be guaranteed to get the conference rate at the DoubleTree Hotel.

Large Hadron Collider experiment uses HTCondor and Amazon Web Services to probe nature

This Amazon Web Services blog post explains how scientists at Fermilab (a Tier 1 data center for the CMS experiment at the LHC) use HTCondor and Amazon Web Services to elastically adapt their computational capacity to changing requirements. Fermilab added 58,000 cores with HTCondor and AWS, allowing them to simulate 500 million events in 10 days using 2.9 million jobs. Adding cores dynamically improves cost efficiency by provisioning resources only when they are needed.

Join us at HTCondor Week 2016!

We want to invite you to HTCondor Week 2016, our annual HTCondor user conference, in beautiful Madison, Wisconsin, May 17-20, 2016. We will again host HTCondor Week at the Wisconsin Institutes for Discovery, a state-of-the-art facility for academic and private research specifically designed to foster private and public collaboration. It provides HTCondor Week attendees with a compelling environment in which to attend tutorials and talks from HTCondor developers and users like you. It also provides many comfortable spaces for one-on-one or small group collaborations throughout the week.

Our current development series, 8.5, is well underway toward our upcoming production release. When you attend, you will learn how to take advantage of the latest features. You'll also get a peek into our longer term development plans--something you can only get at HTCondor Week!

We will have a variety of in-depth tutorials and talks where you can not only learn more about HTCondor, but you can also learn how other people are using and deploying HTCondor. Best of all, you can establish contacts and learn best practices from people in industry, government, and academia who are using HTCondor to solve hard problems, many of which may be similar to those you are facing.

Speaking of learning from the community, we'd love to have you give a talk at HTCondor Week. Talks are 15-20 minutes long and are a great way to share your ideas and get feedback from the community. If you have a compelling use of HTCondor you'd like to share, see our speaker's page.

You can register, get hotel details, and see the agenda overview on the HTCondor Week 2016 site.

HTCondor was critical to the discovery of Einstein's gravitational waves at LIGO

This New Universe Daily news article discusses the collaboration between the HTCondor team at UW-Madison and the LIGO team at UW-Milwaukee, and how the HTCondor software was critical to the discovery of gravitational waves and will continue to be vital as LIGO moves forward. “For 20 years, LIGO was trying to find a needle in a haystack. Now we’re going to build a needle detection factory,” said Peter Couvares, a Senior Scientist with the LIGO project.

HTCondor helps LIGO confirm last unproven Albert Einstein theory

This Morgridge Institute news article explains the rich back-story of HTCondor’s role behind the recent announcement that scientists from the Laser Interferometer Gravitational-Wave Observatory (LIGO) unlocked the final door to Einstein’s Theory of Relativity. More than 700 LIGO scientists have used HTCondor to run complex data analysis workflows, accumulating 50 million core-hours in the past six months alone.

OSG computational power helps solve 30-year old protein puzzle

This Open Science Grid news article discusses how the Baker Lab at the University of Washington has used HTCondor and the OSG to successfully simulate the cylindrical TIM-barrel (triosephosphate isomerase-barrel) protein fold, which has been a challenge for nearly 30 years. TIM-barrel protein folds occur widely in enzymes, meaning that understanding them is important for applications such as the development of new vaccines. The Baker Lab performed about 2.4 million core hours of computation on the OSG in 2015.

ATLAS and BNL Bring Amazon EC2 Online

This Open Science Grid news article discusses how a pilot project at the RHIC/ATLAS Computing Facility (RACF) at Brookhaven National Laboratory has used HTCondor-G to incorporate virtual machines from Amazon’s Elastic Compute Cloud (EC2) spot market into a scientific computation platform. The ATLAS experiment is moving towards using commercial clouds for computation as budget constraints make maintaining dedicated data centers more difficult.

HTCondor helps to find gravitational waves

This Open Science Grid news article discusses the role of HTCondor, Pegasus and the Open Science Grid in the recently-announced discovery of gravitational waves by the Laser Interferometer Gravitational-Wave Observatory (LIGO). LIGO used a single HTCondor-based system to run computations across LIGO Data Grid, OSG and Extreme Science and Engineering Discovery Environment (XSEDE)-based resources, and consumed 3,956,910 compute hours on OSG.

Registration open for Barcelona Workshop for HTCondor / ARC CE

REGISTRATION IS NOW OPEN (until 22nd Feb.)!! The workshop fee is 80 EUROS (VAT included), which covers the lunches and all coffee breaks during the event. The list of recommended hotels, instructions for fee payment, and directions to the venue are available on the workshop homepage.

- Where: Barcelona, Spain, at the ALBA Synchrotron Facility

- When: Monday February 29 2016 through Friday March 4 2016.

- Workshop homepage: https://indico.cern.ch/e/Spring2016HTCondorWorkshop

Re-release of RPMs for HTCondor 8.4.3 and 8.5.1

The HTCondor team is re-releasing the RPMs for 8.4.3 and 8.5.1. A recent change to correct problems with Standard Universe in the RPM packaging resulted in unoptimized binaries being packaged. The new RPMs have optimized binaries.

HTCondor / ARC CE Workshop: 29 February 2016 to 4 March 2016

There will be a workshop for HTCondor and ARC CE users in Barcelona, Spain, from February 29 through March 4, 2016.

- Where: Barcelona, Spain, at the ALBA Synchrotron Facility

- When: Monday February 29 2016 through Friday March 4 2016.

- Workshop homepage: https://indico.cern.ch/e/Spring2016HTCondorWorkshop

Save the dates! The HTCondor team, the NorduGrid collaboration, and the Port d'Informació Científica (PIC) + ALBA Synchrotron present a workshop for the users and administrators of HTCondor, the HTCondor CE and the ARC CE to learn and connect in Barcelona, Spain. This is an opportunity for novice and experienced system administrators and users to learn, get help and exchange ideas among themselves and with the developers and experts.

The workshop will offer:

- Introductory tutorials on using and administrating HTCondor, HTCondor CE and ARC CE

- Technical talks on usage and deployment from developers and your fellow users

- Talks and tutorials on recent features, configuration, and roadmap

- The opportunity to meet with HTCondor developers, ARC CE developers, and other experts for non-structured office hours consultancy

Speaking of learning from the community, we would like to hear from people interested in presenting at this workshop. If you have a use case or best practices involving HTCondor, HTCondor CE or ARC CE you'd like to share, please send us an email at hepix-condorworkshop2016-interest (at) cern (dot) ch .

Information on registration will be available soon at: https://indico.cern.ch/e/Spring2016HTCondorWorkshop

Timetable overview: Monday, Tuesday, and Wednesday presentations will be dedicated to HTCondor and HTCondor CE, while Thursday's presentations will be dedicated to ARC CE. HTCondor and ARC CE developers and experts will be available on Thursday and Friday morning for non-structured "office hours" consultancy and individual discussions with users.

We hope to see you in Barcelona!

HTCondor helps astronomers with the hydrogen location problem

This UW-Madison news article discusses how a new computational approach permits evaluation of hydrogen data using software, which may replace the time-consuming manual approach. Putting HTCondor into the mix scales well given the vast quantities of data expected as the Square Kilometer Array radio telescope is realized.

HTCondor Week 2015 Registration Open

We invite HTCondor users, administrators, and developers to HTCondor Week 2015, our annual HTCondor user conference, in beautiful Madison, Wisconsin, May 19-22, 2015. HTCondor Week features tutorials and talks from HTCondor developers, administrators, and users. It also provides an opportunity for one-on-one or small group collaborations throughout the week.

HTCondor Week 2015 announced!

HTCondor Week 2015 is May 19–22, 2015 in Madison, Wisconsin. Join other users, administrators, and developers for the opportunity to exchange ideas and experiences, to learn about the latest research, to experience live demos, and to influence our short and long term research and development directions.

HTCondor assisted the paleobiology application of DeepDive

This UW Madison news article describes the paleobiology application of the DeepDive system, which used text-mining techniques to extract data from publications and build a database. The quality of the database contents equaled that achieved by scientists. HTCondor helped to provide the million hours of compute time needed to build the database from tens of thousands of publications.

Registration open for European HTCondor Pool Admin Workshop: Dec. 8th - Dec. 11th, 2014

The HTCondor team, together with the Worldwide LHC Computing Grid Deployment Board, is offering a four-day workshop from the 8th through the 11th of December at CERN in Geneva, Switzerland, for HTCondor pool administrators. Topics envisioned include pool configuration, management, theory of operation, sharing of best practices and experiences, and monitoring of individual HTCondor batch pools. Special subjects of emphasis may include: scheduling, pool policy selection, performance tuning, debugging failures, and previews of upcoming features of HTCondor. Time will be provided for question and answer sessions. Attendees should have some knowledge of Linux and network administration, but no HTCondor experience will be required. See the workshop’s web site to register and for more details.

Videos from HTCondor Week 2014 available online

The HTCondor team is pleased to announce that many videos from HTCondor Week 2014 are now available for streaming and download. These include an introduction to using HTCondor, introductory and advanced tutorials for DAGMan, and an introduction to administrating HTCondor.

European HTCondor Pool Admin Workshop: Dec. 8th - Dec. 11th

The HTCondor team, together with the Worldwide LHC Computing Grid Deployment Board, plans to offer a two-day workshop on the 8th and 9th of December at CERN in Geneva, Switzerland. HTCondor experts will also be available at CERN on Dec 10th and 11th for less structured one-on-one interaction and problem-solving. This workshop will provide lectures and presentations aimed towards both new and experienced administrators of HTCondor pools set up to manage local compute clusters. See the workshop’s web site for more details.

Novartis uses HTCondor to further cancer drug design.

This Novartis presentation at an Amazon Web Services Summit describes the problem and its solution, in which Cycle Computing and HTCondor enable the scheduling of 10,600 Amazon EC2 spot instances.

OpenSSL vulnerability

HTCondor users who are using the SSL or GSI authentication methods, or submitting grid universe jobs may be vulnerable. Please see the post on the HTCondor-users mailing list.

Consider presenting your work at HTCondor Week 2014

HTCondor Week attendees are interested in hearing about your efforts during our annual meeting, April 28-30. Please consider presenting. Details for adding your talk to the schedule are given in this page of Information for HTCondor Week Speakers.

HTCondor Week 2014 Announced: April 28-30

HTCondor Week 2014, our annual HTCondor user conference, is scheduled for April 28-April 30, 2014. We will again host HTCondor Week at the Wisconsin Institutes for Discovery in beautiful Madison, Wisconsin.

In a change from previous years, technical talks will begin on Monday. See the web site for current details.

At HTCondor Week, you can look forward to:

- Technical talks on usage and deployment from developers and your fellow users

- Talks and tutorials on new HTCondor features

- Talks on future plans for HTCondor

- Introductory tutorials on using and administrating HTCondor

- The opportunity to meet with HTCondor developers and other users

Information on registration and scheduling will be available soon.

HTCondor assists in Einstein@Home discovery of a new radio pulsar

We add our congratulations to James Drews of the University of Wisconsin-Madison for the discovery of J1859+03, a new radio pulsar, within data from the Arecibo Observatory PALFA survey. James backfills the Computer Aided Engineering HTCondor pool with the Einstein@Home BOINC client.

Interview with Miron Livny

In this UW-Madison news story, Miron Livny offers perspective on finding the Higgs boson particle and the computational collaboration that supported this and other scientific research.

HTCondor is a hero of software engineering

as reported by Ian Cottam in his blog for the Software Sustainability Institute at the University of Manchester. Ian appreciates that HTCondor is easy to install, runs on many platforms, and contains features such as DAGMan to order the execution of sets of jobs, making the system even more useful.

Success stories enhanced by HTCondor in the area of brain research

International Science Grid This Week (ISGTW) reports on two research efforts in the area of brain research. The work of Ned Kalin is highlighted in this article on brain circuitry and mechanisms underlying anxiety. High throughput computing also allows Mike Koenigs’s research in psychopathy to analyze data in days, where without HTC it might take years.

Miron Livny receives the HPDC 2013 Achievement Award

Miron Livny, Director of the UW-Madison Center for High Throughput Computing and founder of the HTCondor workload management system, has been honored with the 2013 High Performance Parallel and Distributed Computing (HPDC) Achievement Award.

Open Science Grid releases Bosco 1.2, based on HTCondor

Bosco is a client for Linux and Mac operating systems for submitting jobs to remote batch systems without administrator assistance. It is designed for end-users, and only requires ssh access to one or more cluster front-ends. Target clusters can be HTCondor, LSF, PBS, SGE or SLURM managed resources. The new Bosco 1.2 release is much easier to install, will handle more jobs, will send clearer error messages, and makes it easier to specify the memory you need inside the clusters you connect to.

HPCwire article highlights running stochastic models on HTCondor

An article published at HPCwire highlights research out of Brigham Young University with a goal to demonstrate an alternative model to High Performance Computing (HPC) for water resource stakeholders by leveraging High Throughput Computing (HTC) with HTCondor.

ATLAS project at CERN describes their computing environment

The HPCwire: CERN, Google Drive Future of Global Science Initiatives article describes the computing environment of the ATLAS project at CERN. HTCondor and now Google Compute Engine aid the extensive collision analysis effort for ATLAS.

HTC deals with big data

Contributor Miha Ahronovitz traces the history of high throughput computing (HTC), noting the particularly enthusiastic response from the high energy physics world and the role of HTC in such important discoveries as the Higgs boson. As one of the biggest generators of data, this community has been dealing with the “big data” deluge long before “big data” assumed its position as the buzzword du jour. Read more at HPC In the Cloud.

Paradyn/HTCondor Week 2013 registration open

We want to invite you to HTCondor Week 2013, our annual HTCondor user conference, in beautiful Madison, WI April 29-May 3, 2013. (HTCondor Week was formerly named Condor Week, matching a name change for the software.) We will again host HTCondor Week at the Wisconsin Institutes for Discovery, a state of the art facility for academic and private research specifically designed to foster private and public collaboration. It provides HTCondor Week attendees a compelling environment to attend tutorials and talks from HTCondor developers and users like you. It also provides many comfortable spaces for one-on-one or small group collaborations throughout the week. This year we continue our partnership with the Paradyn Tools Project, making this year Paradyn/HTCondor Week 2013. There will be a full slate of tutorials and talks for both HTCondor and Paradyn.

Our current development series, 7.9, is well underway toward our upcoming production release. When you attend, you will learn how to take advantage of the latest features such as per-job PID namespaces, cgroup enforced resource limits, Python bindings, CPU affinity, BOSCO for submitting jobs to remote batch systems without administrator assistance, EC2 spot instance support, and a variety of speed and memory optimizations. You'll also get a peek into our longer term development plans--something you can only get at HTCondor Week!

We will have a variety of in-depth tutorials, talks, and panels where you can not only learn more about HTCondor, but you can also learn how other people are using and deploying HTCondor. Best of all you can establish contacts and learn best practices from people in industry, government and academia who are using HTCondor to solve hard problems, many of which may be similar to those facing you.

Speaking of learning from the community, we'd love to have you give a talk at HTCondor Week. Talks are 20 minutes long and are a great way to share your ideas and get feedback from the community. If you have a compelling use of HTCondor you'd like to share, let Alan De Smet know (adesmet@cs.wisc.edu) and he'll help you out. More information on speaking at HTCondor Week is available at the HTCondor Week web site.

You can register, get the hotel details and see the agenda overview on the HTCondor Week 2013 site. See you soon in Madison!

"Condor" name changing to "HTCondor"

In order to resolve a lawsuit challenging the University of Wisconsin-Madison’s use of the “Condor” trademark, the University has agreed to begin referring to its Condor software as “HTCondor” (pronounced “aitch-tee-condor”). The letters at the start of the new name (“HT”) derive from the software’s primary objective: to enable high throughput computing, often abbreviated as HTC. Starting in the end of October and through November of this year, you will begin to see this change reflected on our web site, documentation, web URLs, email lists, and wiki. While the name of the software is changing, nothing about the naming or usage of the command-line tools, APIs, environment variables, or source code will change. Portals, procedures, scripts, gateways, and other code built on top of the Condor software should not have to change at all when HTCondor is installed.

Condor Security Release: 7.8.6

The Condor Team is pleased to announce the release of Condor 7.8.6, which contains an important security fix that was incorrectly documented as being in the 7.8.5 release. This release is otherwise identical to the 7.8.5 release. Affected users should upgrade as soon as possible. More details on the security issue can be found here. Condor binaries and source code are available from our Downloads page.

Red Hat releases Enterprise MRG 2.2

This Red Hat press release describes updates in the new 2.2 release. New features increase security and expand cloud readiness with jobs that can be submitted to use the Condor Deltacloud API.

Condor Security Release: 7.6.9

Regrettably, due to an error in building 7.6.8, it is not a valid release and has been pulled from the web. Please update to version 7.6.9 or 7.8.2 instead to address the security issue posted yesterday. More details on the security problem can be found here, and Condor binaries and source code are available from our Downloads page.

Condor Security Releases: 7.8.2 and 7.6.8

The Condor Team is pleased to announce the release of Condor 7.8.2 and Condor 7.6.8, which fix an important security issue. All users should upgrade as soon as possible. More details on the security problem can be found here, and Condor binaries and source code are available from our Downloads page.

Condor contributes to LHC and research efforts benefit

This UW-Madison news release describes the collaboration and contribution of Condor to the computing efforts that support research efforts across the globe.

DOE and NSF award $27 million to OSG

This Department of Energy Office of Science and the National Science Foundation award to the OSG will extend the reach of OSG capabilities that support research with computing power and data storage, as detailed in this UW-Madison news release. Condor is a significant component in the distributed high-throughput OSG middleware stack.

50,000-Core Condor cluster provisioned by Cycle Computing

for the Schroedinger Drug Discovery Applications Group. See the article in Hostingtecnews.com, or the article in BioIT World. And, Condor helped enable this research.

Two Condor papers selected as most influential in HPDC

Two papers by the Condor Team were selected to represent the most influential papers in the history of The International ACM Symposium on High-Performance Parallel and Distributed Computing (HPDC). Details…

Congratulations to Victor Ruotti

computational biologist at the Morgridge Institute for Research, winner of the CycleCloud BigScience Challenge 2011. Read more about both the challenge and the finalists in the Cycle Computing press release.

Condor in EC2 now supported by Red Hat

Red Hat Enterprise MRG support expands into the cloud as announced in this press release, by leveraging the EC2 job universe in Condor, and by maintaining supported images of Red Hat Enterprise Linux with Condor pre-installed on Amazon storage services. Red Hat MRG can schedule local grids, remote grids, virtual machines for internal clouds, and now, rented cloud infrastructure. There is also documentation of this feature.

Red Hat announces the release of Red Hat Enterprise MRG 2.1,

offering increased performance, reliability, and interoperability, as presented in this news release.

Upgrades recommended

Please upgrade to the latest versions of the stable and development series. There have been many important bug fixes in both, including a fix for a possible Denial Of Service attack from trusted users. (Details here)

Center for High Throughput Computing (CHTC) becomes first Red Hat Center of Excellence Development Partner

Red Hat announced that it has expanded its technology partnership with the University of Wisconsin-Madison (UW-Madison) to establish the Center for High Throughput Computing (CHTC) as the first Red Hat Center of Excellence Development Partner. In addition, Red Hat has awarded the UW-Madison CHTC its Red Hat Cloud Leadership Award for its advancements in cloud computing based on the open source Condor project and Red Hat technologies. See the Red Hat press release for more information.

Cycle Computing uses Condor to create 10,000-core cluster in Amazon's EC2

Cycle Computing used Condor to create a 10,000-core cluster on top of Amazon EC2. The virtual cluster was used for 8 hours to provide 80,000 hours of compute time for protein analysis work for Genentech. “The 10,000-core cluster that Cycle Computing set up and ran for eight hours on behalf of Genentech would have ranked at 114 on the Top 500 computing list from last November (the most current ranking), so it was not exactly a toy even if the cluster was ephemeral.”

Purdue researchers analyze Wikipedia using Condor, DAGMan, and TeraGrid

Dr. Sorin Adam Matei and his team are using Condor and DAGMan on TeraGrid to study Wikipedia. They are studying how collaborative, network-driven projects organize and function. Condor allowed them to harness approximately 4,800 compute hours in one day, processing 4 terabytes of information.

Red Hat announces the release of Enterprise MRG Grid 1.3

The Red Hat news release details the release of Enterprise MRG Grid 1.3, its grid product based on Condor. The release includes new administrative and user tools, Windows execute node support, enhanced workflow management, improved scheduling capabilities, centralized configuration management, and new virtual machine and cloud spill-over features. MRG Grid is also now fully supported for customers in North America and extended coverage is provided to customers throughout Europe. With MRG Grid 1.3, customers will gain the ability to scale to tens of thousands of devices, manage their grid in a centralized fashion, be able to provision virtual machines for grid jobs, and connect their grid to private and public clouds.

Announcing Condor Day Japan

The first Condor Day Japan workshop will be held on November 4, 2010 in Akihabara, Tokyo. This one day workshop will give collaborators and users the chance to exchange ideas and experiences, learn about latest research, experience live demos, and influence short and long term research and development directions. The submission deadline is October 18, 2010. Please send inquiries to condor-days@m.aist.go.jp .

Purdue's Condor-based DiaGrid manages power as well

ZDNet’s GreenTech Pastures blog reports that Purdue’s Condor-based DiaGrid helps them maximize utilization for electricity consumed. DiaGrid manages 28,000 processors across three states.

Cardiff University credits Condor with reducing energy bills

This Joint Information Systems Committee (JISC) September 2009 article “Energy Efficiency for High-Powered Research Computing” shows the quantity of research computing that can be completed while still saving power.

Open Source Energy Savings

The article “Open Source Energy Savings” at Forbes.com discusses Condor’s green computing work.

Condor cluster helps to evolve naval strategy in Singapore

Researchers at Singapore’s national defense R&D organization, DSO National Labs, run evolutionary algorithms on their Condor cluster to evaluate and adapt maritime force protection tactics. This paper describes computer-evolved strategies running on a Condor cluster and applies them to the defense of commercial shipping in the face of piracy.

Ordnance Survey Ireland Maps with Condor

Ordnance Survey Ireland deploys Condor as one of many technologies to build digital maps of Ireland.

Purdue's Condor-based DiaGrid named top 100 IT project

Purdue’s Condor-based DiaGrid was named a Top-100 IT project of 2009 by Network World. DiaGrid has 177 teraflops of capacity.

The Condor project will help with the workflows

generated for both data analysis and visualization efforts with XD award winners: University of Tennessee (see the press release) and the Texas Advanced Computing Center (TACC) at the University of Texas at Austin (as well as this press release). These TeraGrid eXtremeDigital Resource (XD) awards from the NSF will enable a center for Remote Data Analysis and Visualization (RDAV) research.

Red Hat CEO Jim Whitehurst singles out Condor

as code that adds value, in this eWeek.com article about Red Hat’s innovative business model.

Condor supports DiaGrid at Purdue University,

a recipient of a Campus Technology Innovators Award. This Campus Technology article describes each of the award winners. Congratulations to Purdue and all contributors to DiaGrid!

International Summer School on Grid Computing 2009

The International Summer School on Grid Computing 2009 will be held in July 2009 in Sophia Antipolis, Nice, France. It’s a two-week hands-on summer school course in grid technologies. You’ll get a chance to learn a lot of in-depth material not only about various grid technologies, but about the principles that underlie them and connect them together. The school will include talks from well-known grid experts in deploying, using, and developing grids. Hands-on laboratory exercises will give participants experience with widely used grid middleware. More Information

NCSA reports on the Vulnerability Assessment project

This NCSA news release highlights the work of Jim Kupsch, working for the SDSC and the UW-Madison Vulnerability Assessment project. The project performs independent security audits of grid computing codes such as Condor and MyProxy.

CXOtoday.com overview of Condor

This CXOtoday.com article gives an overview of Condor, noting that Condor scales from small to large use cases.

Condor helps Purdue and IU work together

This HPCwire article presents Indiana University’s entrance into Purdue’s national computing grid, DiaGrid, which uses Condor.

UW scientists play key role in largest physics experiment to date

An article at The Capital Times briefly mentions Professor Livny and the Condor Project’s work with the Large Hadron Collider. On Professor Livny’s work, Associate Dean Terry Millar said, “The computational contributions from UW are pretty profound.”

Pegasus to support work at the National Human Genome Research Institute

The NSF funded Pegasus Workflow Management System, created by researchers at University of Southern California Information Sciences Institute (ISI) in collaboration with the Condor Project, has been chosen to support workflow-based analysis for the coordinating center that links genomic and epidemiologic studies. More information has been released by ISI.

European Condor Week 2008

European Condor Week 2008 will take place 21-24 October in Barcelona, Spain, and will be hosted by Universitat Autonoma de Barcelona. Check out the European Condor Week 2008 web page for more information. If you are interested in giving a talk and sharing your experiences please email EUCondorWeek@caos.uab.es.

Condor Week 2008 Videos

Videos for five of the tutorials given at Condor Week 2008 are now available for download. The tutorials are: Using Condor: An Introduction, Administrating Condor, Condor Administrator’s How-to, Virtual Machines in Condor, and Building and Modifying Condor.

Martin Banks editorial: "The Parallel Bridge"

This ITPRO editorial discusses HTC as the future for parallel business and commerce calculations, and references a collaboration between IBM labs and the Condor Project to bring HTC to the Blue Gene supercomputer.

Nature publishes virus views enabled using Purdue's Condor flock

As published in the Feb 28th issue of Nature, a team led by a Purdue University researcher has achieved images of a virus in detail two times greater than had previously been achieved. This breakthrough was enabled through the use of Purdue’s Condor distributed computing grid, which comprises more than 7,000 computers.

Milwaukee Institute seeks to build computational power

Private sector leaders in Milwaukee and southeastern Wisconsin are trying to bridge the gap between universities and businesses through more effective use of computing and scientific resources, and their vehicle is the new Milwaukee Institute, a non-profit organization that is building a cyber infrastructure of shared, grid-based computing that leverages Condor Project technology.

Red Hat Enterprise MRG distributed computing platform announced

Today Red Hat announced Red Hat Enterprise MRG, a distributed computing platform offering that utilizes Condor for workload management. “The University of Wisconsin is pleased to work with Red Hat around the Condor project,” said Terry Millar, Associate Dean at the UW-Madison Graduate School.

Condor-G and GT4 Tutorial

Jan Ploski offers this tutorial documentation for Condor-G GT4 administrators and end users (those submitting jobs).

Purdue University becomes an HPC-Ops center

As described in the HPCwire press release, Purdue University is to become one of five High Performance Computing Operations (HPC-Ops) centers within the NSF-funded TeraGrid project. Purdue has the largest academic Condor pool in the world, which provides computing cycles to TeraGrid.

Clemson corrals idle computers

“When all the Clemson University students are tucked in for the night, hundreds of desktops across the campus are turned on to create a supercomputing grid that can rapidly process large amounts of data.” “Her request for a series of computations that would have taken 10 years on a regular desktop computer was completed in just a few days.”

Clemson University upgrades to supercomputer

GreenvilleOnline.com reports, “Condor and the new Palmetto Cluster enable Clemson faculty to do faster and deeper research. The technology opens doors to a realm where no Clemson researcher could go before – and research grants dollars not accessible without the new supercomputing power.”

Serious bug in v6.9.4 impacts standard universe jobs

A bug introduced in the most recent developer release, Condor v6.9.4, can cause jobs running in Condor’s Standard Universe to write corrupt data. The Condor Team has written a patch that will be included in v6.9.5 which is forthcoming; if you need the patch sooner, please contact us. This bug only impacts jobs that write binary (non-ASCII) files and are submitted to the Standard Universe.

High Throughput Computing Week

High Throughput Computing Week in Edinburgh is a four-day event in November 2007 that will discuss several aspects of high throughput computing (HTC), including transforming a task so it can benefit from HTC and choosing technologies to deliver HTC, as well as trying some HTC systems in person. Condor will be one of the four technologies discussed. More Details

Cardiff supplies UK National Grid Service with first Windows Condor Pool

Cardiff University announces the availability of a 1,000 processor Condor Pool to the UK National Grid Service. “Use by the University’s researchers has grown considerably in this time and has saved local researchers years of time in processing their results.” “Using Gasbor to build a model of a typical Tropoelastin molecule takes 30 hours. Using Condor the same simulation ran in just two hours.”

More secure configuration for PostgreSQL and Quill documented

When Quill was first developed, it was designed to work with older versions of the PostgreSQL database server. Newer versions of PostgreSQL have stronger security features, which can be enabled in the PostgreSQL configuration, requiring no changes to the Quill daemon. We recommend that all Quill sites upgrade to the latest version of PostgreSQL (8.2), and make these easy changes to their PostgreSQL configuration.

If a site does not do so, any user who can sniff the network between the Quill daemon and the PostgreSQL server can obtain the Quill database password, and make changes to the Quill database. This can change the output of condor_q and condor_history, but cannot otherwise impact Condor’s correctness or security. Otherwise-unauthorized users cannot use this database password to run jobs or mutate Condor’s configuration.

A second problem with the previously recommended configuration was that any user with the publicly-available read-only Quill PostgreSQL password could create new tables in the database and store information there. While this does not affect the running of Condor in any way, sites may view that as a security problem.

As of Condor 6.8.6 and 6.9.4, the Condor manual has been updated to describe the more secure installation of PostgreSQL, which remedies both of the above problems. These changes include the following:

- Change the authentication method (the final field) in the pg_hba.conf file from “password” to “md5”. Restarting PostgreSQL is then needed for this to take effect.

- Only allow the quillwriter account to create tables. To do this, run the following two SQL commands as the database owner: REVOKE CREATE on SCHEMA public FROM PUBLIC; GRANT CREATE on SCHEMA public to quillwriter;
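As a rough illustration of the pg_hba.conf change described above, the following Python sketch rewrites the final field of each rule from "password" to "md5". It assumes each rule is a whitespace-separated record with the authentication method last, as the text states. The function name `harden_pg_hba` and the sample database name, user name, and address are hypothetical and used only for illustration. It is not taken from the Condor manual, which remains the authoritative reference.

```python
def harden_pg_hba(text: str) -> str:
    """Return pg_hba.conf text with a final 'password' field changed to 'md5'.

    Assumption: every rule is one whitespace-separated line whose last
    field is the authentication method. Comments and blank lines are kept.
    """
    out = []
    for line in text.splitlines():
        stripped = line.strip()
        # Leave blank lines and comment lines untouched.
        if not stripped or stripped.startswith("#"):
            out.append(line)
            continue
        fields = stripped.split()
        if fields[-1] == "password":
            fields[-1] = "md5"
            # Note: rewritten rules are re-joined with single spaces.
            out.append(" ".join(fields))
        else:
            out.append(line)
    return "\n".join(out)


# The two SQL commands quoted in the text, to be run as the database owner.
QUILL_SQL = (
    "REVOKE CREATE ON SCHEMA public FROM PUBLIC;",
    "GRANT CREATE ON SCHEMA public TO quillwriter;",
)

if __name__ == "__main__":
    # Hypothetical sample rule; database, user, and address are made up.
    sample = (
        "# TYPE DATABASE USER ADDRESS METHOD\n"
        "host quill quillreader 192.0.2.0/24 password\n"
    )
    print(harden_pg_hba(sample))
    for statement in QUILL_SQL:
        print(statement)
```

After writing the modified file back, PostgreSQL still needs to be restarted for the new authentication method to take effect, as noted above.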

Durham University Department of Geography flies with Condor

In 2006 the Durham University Department of Geography began examining ways of increasing the speed at which research results could be obtained from geophysical modelling simulations. After a period of testing lasting over 6 months, the Department of Geography has successfully implemented a distributed computing system built upon the Condor platform. Since its implementation, the Condor distributed computing network has provided a unique facility for spatial modelling which is particularly suited to Monte Carlo approaches. Thanks to the processing power offered by the Condor network, members of the Catchment, River and Hillslope Science (CRHS) group have developed methods of ultra-high resolution image processing and remote sensing which are pushing the traditional boundaries of ecological monitoring at both catchment and local scales.

Condor Week 2007 Concludes

After four days of presentations, tutorials, and discussion sessions, Condor Week 2007 came to a close. Red Hat presented plans to integrate Condor into Red Hat and Fedora distributions, and to provide enterprise-level support for Condor installations. Government labs reported results from their deployment efforts over the past year; for example, a production Condor installation at Brookhaven National Labs consisting of over 4800 machines ran 2.8 million jobs in the past 3 months, delivering 6.2 million wallclock hours to over 400 scientists. IBM reported on an ongoing project to bring Condor and Blue Gene technologies together, in order to enable High Throughput style computing on IBM’s popular Blue Gene supercomputer. The Condor Team reported on the scalability enhancements in the Condor 6.9 development series. Check out the 35+ presentations delivered this week.

BAE Systems incorporating Condor into their SOCET SET v5.4 release

BAE Systems produces geospatial exploitation tools. The GXP Mosaic News and Updates hints at using Condor for complex TFRD decompression and VQ compression tasks, as well as the multi-CPU processing of orthomosaics.

Condor helps Clemson University researchers

GRIDtoday reports in the article “Clemson Researchers Get Boost From Condor” that Clemson University has deployed a campus-wide computing grid built on Condor. “‘The Condor grid has enabled me to conduct my research, without a doubt,’ [Assistant Professor] Kurz said. ‘Before using the campus grid, I was completely without hope of completing the computational studies that my research required. As soon as I saw hundreds of my jobs running on the campus grid, I started sending love notes to the Condor team at Clemson.’”

International Summer School in Grid Computing

The fifth in the highly successful series of International Summer Schools in Grid Computing will be held at Gripsholmsviken Hotell & Konferens of Mariefred, Sweden, near Stockholm, from 8th to 20th July 2007. The school builds on the integrated curriculum developed over the last few years which brings together the leading grid technologies from around the world, presented by leading figures, and gives students a unique opportunity to study these technologies in depth side by side. More information

Condor Week 2007 Schedule posted

A first draft of the Condor Week 2007 schedule has been posted on the Condor/Paradyn Week 2007 page. The schedule has not been finalized, but it is close.

Condor used in search for alternate fuel sources

GRIDtoday reports in the article “Protein Wranglers” that NCSA researchers are using Condor-G to streamline their workflow. “‘They were spending quite a bit of time doing relatively trivial management tasks, such as moving data back and forth, or resubmitting failed jobs,’ says Kufrin. After gaining familiarity with the existing ‘human-managed’ tasks that are required to carry out lengthy, computationally intensive simulations of this nature, the team identified Condor-G, an existing, proven Grid-enabled implementation of Condor, as a possible solution.”

Purdue doubles the work life of computers with overtime

Purdue University distributes its computing jobs across the university using Condor. “At Purdue, we’re harvesting computer downtime and putting it to good use,” says Gerry McCartney, Purdue’s interim vice president for information technology and chief information officer.

Condor in production at Banesto, a major bank in Spain

Banesto is Spain’s third largest bank in volume of managed resources, and caters to over 3,000,000 customers. With the help of Cediant, Banesto has installed a Condor cluster to replace the work previously performed by a monolithic SMP. As a result, the time it takes to evaluate each portfolio has been reduced by 75%. Read more in an announcement made on the condor-users email list.

Condor's role in "The Wild"

You may have heard about the fantastic computer animation in "The Wild", a Disney film that appeared in theaters last Spring and was recently released on DVD. What you may not have heard is that Condor was used to assist with the tremendous effort of managing over 75 million renders. Read a nice letter we received from Leo Chan and Jason Stowe, film Technology Supervisor and Condor Lead, respectively.

Linux Journal Magazine publishes article "Getting Started with Condor"

Author Irfan Habib wrote in Linux Journal magazine about his experience getting started with Condor. He concludes, “Condor provides the unique possibility of using our current computing infrastructure and investments to target processing of jobs that are simply beyond the capabilities of our most powerful systems… Condor is not only a research toy, but also a piece of robust open-source software that solves real-world problems.”

UW-Madison plays a central role in "Open Science Grid" (OSG)

The National Science Foundation (NSF) and the Department of Energy’s (DOE) Office of Science announced today that they have joined forces to fund a five-year, million program to operate and expand upon the two-year-old national grid. This project collectively taps into the power of thousands of processors distributed across more than 30 participating universities and federal research laboratories. UW-Madison computer scientist Miron Livny, leader of the Condor Project, is principal investigator of OSG and will be in charge of building, maintaining, and coordinating software activities. [OSG Press Release][DDJ Article][Badger Herald Article]

"Setting up a Condor cluster" at Linux.com

Author M. Shuaib Khan offers a brief introduction to setting up Condor at Linux.com. He writes, “Condor is a powerful yet easy-to-use software system for managing a cluster of workstations.”

IBM publishes article "Manage grid resources with Condor web services"

IBM developerWorks has published a very nice tutorial on using Condor’s web services interface (Birdbath). Jeff Mausolf, IBM Application Architect, states “This tutorial is intended to introduce the Web services interface of Condor. We’ll develop a Java technology-based Web services client and demonstrate the major functions of Condor exposed to clients through Web services. The Web services client will submit, monitor, and control jobs in a Condor environment.”

Condor used to research genetic diseases

“Sleeping Computers Unravel Genetic Diseases.” “Now, with the help of the Condor middleware system, Superlink-Online is running in parallel on 200 computers at the Technion and 3,000 at the University of Wisconsin-Madison.” “‘Over the last half year, dozens of geneticists around the world have used Superlink-Online, and thousands of runs – totaling 70 computer years – have been recorded,’ says Professor Assaf Schuster, head of the Technion’s Distributed Systems Laboratory, which developed Superlink-Online’s computational infrastructure.”

UW holds Condor meeting

The Wisconsin State Journal briefly covered Condor Week 2006. “Condor gives UW-Madison a real edge in any competitive research, including physics, biotechnology, chemistry and engineering, said Guri Sohi, computer sciences department chairman.”

Registration open for European Condor Week 2006, June 26-29, Milan, Italy

Registration is now open for European Condor Week 2006. This second European Condor Week is a four-day event that gives Condor collaborators and users the chance to exchange ideas and experiences, learn about the latest research, sign up for detailed tutorials, and celebrate 10 years of collaboration between the University of Wisconsin-Madison Condor Team and the Italian Istituto Nazionale di Fisica Nucleare (INFN - the National Institute of Nuclear Physics). Please join us!

A Peek into Micron's Grid Infrastructure

In an interview for GRIDtoday, Brooklin Gore discusses Micron’s Condor-based grid. Gore’s comments included “We have 11 ‘pools’ (individual grids, all connected via a LAN or WAN) comprising over 11,000 processors at seven sites in four countries. We selected the Condor High Throughput Computing system because it ran on all the platforms we were interested in, met our configuration needs, was widely used and open source yet well supported.”

Simulating Supersymmetry with Condor

An article in Science Grid This Week describes how Condor, combined with resources from the Open Science Grid and the University of Wisconsin Condor Pool, provided over 215 CPU years in less than two months towards a discovery eagerly anticipated by particle physicists around the world.

GAMS and Condor

A recent advertisement for GAMS (a high-level modeling system for mathematical programming problems) features Condor.

Announcing the International Summer School on Grid Computing 2006

If you are interested in learning about grid technology (including Condor) from leading authorities in the field, we encourage you to investigate the International Summer School on Grid Computing. The School will include lectures on the principles, technologies, experience and exploitation of Grids. Lectures will also review the research horizon and report recent significant successes. Lectures will be given in the mornings. In the afternoons the practical exercises will take place on the equipment installed at the School site in Ischia, Italy (near Naples). The work will be challenging but rewarding.

Digital Aerial Solutions (DAS) uses Condor

to help increase image processing capacity, as detailed in this Leica Geosystems Geospatial Imaging press release.

"Communicating outside the flock, Part 2: Integrate grid resources with Condor-G plus Globus Toolkit" at IBM's developerWorks

IBM IT Architect Jeff Mausolf writes, “The Globus Toolkit provides a grid security infrastructure. By augmenting this infrastructure with the job submission, management, and control features of Condor, we can create a grid that extends beyond the Condor pool to include resources controlled by a number of resource managers, such as LoadLeveler, Platform LSF or PBS.”

New ETICS project to improve quality

The goal of the ETICS (eInfrastructure for Testing, Integration and Configuration of Software) project is to improve the quality of Grid and distributed software by offering a practical quality assurance process to software projects, based on a build and test service. Please see the news release.

Formation of a Condor Enterprise Users Group

Enterprise users of Condor are joining together to form a group. If you are a business user of Condor, you may be interested to learn that an effort is underway to form a Condor Enterprise Users Group. The goals of this community are to (1) focus on the unique needs of enterprise/commercial/business users of Condor; (2) share best practices in the enterprise Grid computing space; (3) collaborate on Condor features needed to better support the enterprise space; and (4) discuss ways to support the Condor project for enterprise-focused activities. To learn more, read the announcement posted to the condor-users list. Subscribe to the group at mail-lists/.

"Communicating outside the flock, Part 1: Condor-G with Globus" at IBM's developerWorks

IBM IT Architect Jeff Mausolf writes, “In this article, we will look at how you can use Condor to simplify the tasks associated with job submission, monitoring, and control in a Globus environment. In Part 2, we will look at how you can use Condor’s matchmaking to make intelligent scheduling decisions based on job requirements and resource information, and then leverage the remote resource access capabilities provided by Globus to submit jobs to resources that are not part of the Condor pool.” Also available is “Part 2: Integrate grid resources with Condor-G plus Globus Toolkit.”

"Building a Linux cluster on a budget" from Linux.com

Article author Bruno Gonçalves says “By adding the Condor clustering software we turn this set of machines into a computing cluster that can perform high-throughput scientific computation on a large scale.”

Press release from Canadian environmental minister

entitled “Minister Dion Launches WindScope To Support Government’s Wind Energy Commitment”. The new WindScope software that utilizes Condor allows users to determine the ideal location to install wind turbines.

See Jeff Vance's article "Finding the Business Case for Grids"

in the CIO Update for a discussion of The Hartford’s recent work with Condor. Chris Brown, director of advanced technologies at Hartford Life said, “But the alternatives were expensive, and the ability to scale with a grid was much better,” and “It wasn’t the principal reason for building our grid, but versus a more conventional solution, the grid has saved us millions of dollars.”

Read about The Sweet Sound of Grid Computing

in Brooklin Gore’s article in Grid Today.

Optena launches a Condor Knowledge Base and Support Site

Optena Corporation has recently announced on the Condor-Users Mail List the launching of a Condor Knowledge Base and Support web site, for use by the entire Condor community.

Grid Computing with Condor in the insurance industry

This cover story in TechDecisions for Insurance magazine profiles experiences of organizations in the insurance industry with Condor and grid computing.

Condor provides cycles to the TeraGrid at RCAC

This TeraGrid press release explains how the Rosen Center for Advanced Computing (RCAC) at Purdue University has opened up access to 11 teraflops of computing power to the TeraGrid community by using Condor.

The Rosen Center for Advanced Computing

Read about the Rosen Center for Advanced Computing (RCAC) at Purdue University in the TeraGrid news release.

"Twenty Years of Condor" by Katie Yurkewicz highlights Condor.

In Science Grid This Week: “The Condor idea, which had its root in Livny’s Ph.D. research on distributed computing systems, is that users with computing jobs to run and not enough resources on their desktop should be connected to available resources in the same room or across the globe.”

Alain Roy is in the news.

“Alain Roy: Providing Virtually Foolproof Middleware Access,” by Katie Yurkewicz in Science Grid This Week profiles Condor staff member Alain Roy.

GLOW

The Grid Laboratory of Wisconsin (GLOW) is in the news. Read about it in News@UW-Madison and the EurekAlert press release.

Read "Coraid in Hungarian Grid Project"

This article in Byte and Switch mentions that the Hungarian ClusterGrid Project is using Condor’s flocking support.

Himani Apte

Graduate student Himani Apte is a finalist for the Anita Borg Memorial Scholarship sponsored by Google. Here is Google’s press release. Congratulations!

The Render Queue Integration

Read about the Render Queue Integration on Cirque | Digital, LLC’s Products web page. From the page: “With GDI | Explorer you can submit, manage and prioritize the rendering of your 2D and 3D files directly on an unlimited number of processors using the powerful - yet free - Condor render queue. – The power of all processors on your network is at your fingertips!”

"UW Condor project is a piece of CERN's massive grid computer"

at the Wisconsin Technology Network. “The University of Wisconsin’s Condor software project will provide a component of a cutting-edge grid computing system at European research heavyweight CERN, the IDG news service reports.” “After finding no commercial grid applications that satisfied all its needs, CERN took to cobbling together a system from a variety of sources, starting with the Globus Toolkit from the Globus Alliance and using the Condor project’s scheduling software.”

Condor Week 2005

We recently held our annual Condor Week conference. A survey for attendees, photos, and presentations are now all available for your perusal.

"CERN readies world's biggest science grid"

in Computerworld. “Instead, CERN based its grid on the Globus Toolkit from the Globus Alliance, adding scheduling software from the University of Wisconsin’s Condor project and tools developed in Italy under the European Union’s DataGrid project.”

Hartford Life is in the news